FCoE: Networks & Storage Convergence



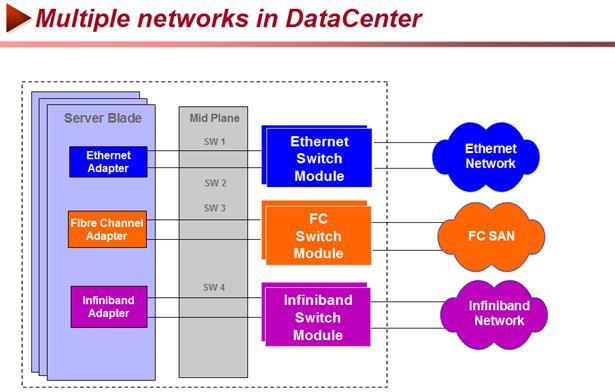

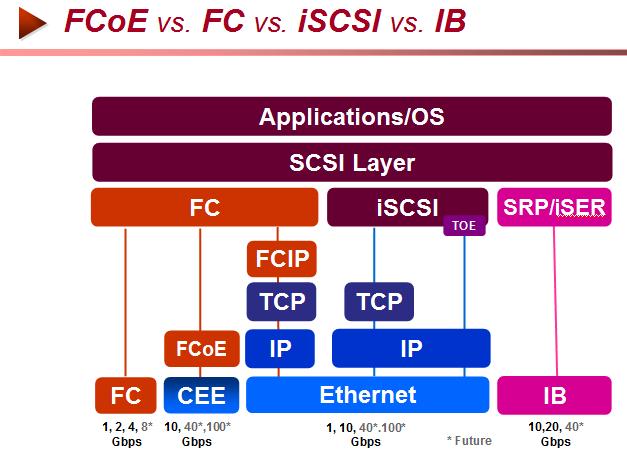

Drivers for the NextGen Datacenter Infrastructure

Ethernet has achieved ubiquity in connecting millions of PCs to servers. The resulting dramatic cost declines in Ethernet adapters and switches have drawn SMBs and medium-size enterprises toward Ethernet-based infrastructure for networking and storage equipment. However, Ethernet is lossy: it drops packets when the network is congested. This has kept IT managers from running mission-critical business or high-performance technical applications in an all-Ethernet storage-to-server environment. As a result, datacenters have resorted to three separate networks to meet the specific needs of different workloads:

- a Storage Area Network (SAN) built on a lossless Fibre Channel (FC) fabric, which provides the robustness and performance needed for storage-to-server traffic;
- IP for network traffic;
- InfiniBand (IB) for clustered high performance computing (HPC) that requires low latency.

In addition, a newer protocol called FCIP allowed FC frames to be transported over distance across IP-based networks. FCIP is a point-to-point wide-area connection that tunnels between two FC switches over an extended IP link. On another front, new technologies have accelerated the demand for higher server I/O performance, in both latency and bandwidth. These include multicore processors, server and storage virtualization, high-density blade servers and Web 2.0 applications. The same technologies have driven the adoption of multiple fabrics for network, storage I/O and cluster-computing IPC traffic. Server virtualization in particular runs multiple virtual machines, applications and operating systems on the same physical server. It therefore generates significant I/O traffic, placing a severe demand on existing multiport 1GbE network infrastructures.

The demand is particularly high on 1GbE storage systems, which must support higher I/O rates, greater capacity, faster provisioning and non-disruptive application delivery. Multi-socket, multicore servers support higher workload levels, which demand greater network throughput. Shared networked storage in virtualized servers likewise requires higher network bandwidth between servers and storage. iSCSI-based networks encapsulate block-level SCSI data into IP packets and run end-to-end over Ethernet, and they became popular. So did the newer iWARP protocol, an implementation of Remote Direct Memory Access (RDMA) over TCP/IP that offers low latency on 10GbE for high-performance clustering applications. Free iSCSI initiator drivers are embedded in Microsoft Windows and Linux, and low-cost target drivers are available in storage disk systems. Together these made iSCSI over IP-based Ethernet very popular in small enterprises, workgroups and remote offices.

Over the last 7-8 years, large enterprises have invested heavily in FC SANs. The last thing they want to do is rip and replace those robust, albeit expensive, investments. Yet they face the conundrum of living with very high management costs: different IT staff, with different expertise and experience, must manage the networking and storage domains separately and completely independently of each other. Replacing everything in the datacenter with Ethernet and iSCSI IP SANs, despite their attractive cost, raised a number of issues. For one thing, IP-based Ethernet is subject to variable latency, called latency jitter. Left unchecked, severe latency jitter can even bring applications down. IP networks can use TCP/IP's recovery and flow control capabilities to contain latency effects up to a point, but TCP/IP overhead is an unacceptable burden for FC traffic.
The holy grail – Needs of the NextGen DataCenter

Having to deal with overlapping networks (IP, FC, IB), IT organizations incur costs in numerous ways. The vision of I/O consolidation and unification is to create and operate a single network instead of three. Adapters, switches and storage systems would all use one physical infrastructure that carries multiple types of traffic. This means purchasing less equipment and fewer spares (host bus and server adapters, cables, switches, and storage systems). It also means drastically reducing ongoing management and maintenance costs (power/cooling, administration, training, etc.). One of the primary enablers of I/O consolidation and unification is 10-Gigabit Ethernet, a technology with bandwidth and latency characteristics sufficient to support multiple traffic flows on the same network link.

The holy grail for datacenter designers has always been a single converged network built on ubiquitous, low-cost Ethernet. That network must carry both network and storage I/O traffic, whose characteristics differ widely, and handle their different requirements efficiently. Such a network lowers equipment costs. Even more importantly, it lowers management costs by relying on a single type of management tool, which simplifies administrative tasks and training. Another requirement is support for block-based (as opposed to file-based) storage. That storage should offer the flexibility to trade latency against bandwidth to meet the performance and availability requirements of various workloads, while remaining agnostic to the OS deployed.

Rise of a Converged Network

The solution to true convergence of disparate networks lay in enhancing Ethernet so that it can transport mission-critical data, by running FC over lossless Layer 2 Ethernet.
The goal was to keep the benefit of low-cost Ethernet everywhere while preserving the strength of FC as the dominant storage interconnect in large datacenters for business continuity, backup and disaster recovery. To that end, a scheme using Ethernet for both FC SAN connectivity and networking was devised. The Fibre Channel over Ethernet (FCoE) protocol specification maps Fibre Channel natively over Ethernet and is independent of the Ethernet forwarding scheme. It allows an evolutionary approach to I/O consolidation by:

- preserving all Fibre Channel constructs;
- maintaining the latency, security, and traffic management attributes of FC;
- preserving investments in tools, training and FC SANs.

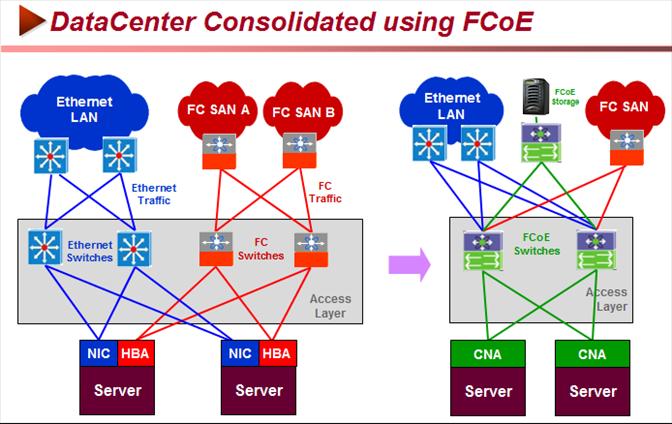

In the wake of this proliferation of separate networks for different workloads (OLTP, Business Intelligence, High Performance Computing, Web 2.0, etc.; for an incisive look, see the IMEX Next Gen Data Center Industry Report), a fierce debate has raged over the future of interconnects. It has created major uncertainty and headaches for IT decision-makers:

- Will Fibre Channel adoption outpace iSCSI deployments, or will new IP SAN developments make iSCSI robust enough to become a viable alternative for high-end storage as well?
- Should Fibre Channel SANs be deployed throughout the organization now, on the belief that Fibre Channel has a long-term future, or will all storage-host interconnection eventually move to iSCSI?
- What is the chance that IB displaces FC in medium-size organizations, leaving iSCSI and IB as the mainstays?

FCoE enables FC traffic to run over Ethernet with no performance degradation and without requiring any changes to the FC frame. It supports SAN management domains by maintaining logical FC SANs across Ethernet. Because FCoE maps FC directly onto Ethernet, FC can run over Ethernet with no (or minimal) changes to drivers, software stacks and management tools.

With Fibre Channel over Ethernet, IT organizations can incorporate FCoE-aware Ethernet switches into the access layer and converged network adapters or server adapters with an FCoE initiator at the host layer. This simplifies the network topology so that only a single pair of adapters and a single pair of network cables are needed to connect each server to both the Ethernet and the Fibre Channel networks. The FCoE-aware switches separate LAN and SAN traffic, providing seamless connectivity to existing storage systems. FCoE is intended primarily as a way to unify FC and Ethernet networks, but it may also have an impact on other technologies being used in enterprise SANs and data centers.
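The cabling claim above reduces to simple arithmetic. The sketch below is illustrative only and rests on hypothetical assumptions:

- In the separate-network design, a dual-homed server needs a pair of Ethernet NIC ports plus a pair of FC HBA ports.
- In the FCoE design, the same server needs one pair of converged network adapter (CNA) ports.
- Each port uses one cable.

The class name `ServerIO` and the rack size are made up for the example.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ServerIO:
    """Per-server I/O ports for one design (hypothetical configuration)."""
    nic_ports: int  # Ethernet LAN ports
    hba_ports: int  # Fibre Channel HBA ports
    cna_ports: int  # converged ports carrying both LAN and FCoE traffic

    def ports(self) -> int:
        return self.nic_ports + self.hba_ports + self.cna_ports


# Assumed designs: a redundant pair of connections to each network.
SEPARATE = ServerIO(nic_ports=2, hba_ports=2, cna_ports=0)
CONVERGED = ServerIO(nic_ports=0, hba_ports=0, cna_ports=2)


def rack_cables(design: ServerIO, servers: int) -> int:
    """Server-side cables for a rack, assuming one cable per port."""
    return design.ports() * servers


if __name__ == "__main__":
    servers = 40  # illustrative rack size
    print("separate LAN + SAN:", rack_cables(SEPARATE, servers))   # 160
    print("converged FCoE    :", rack_cables(CONVERGED, servers))  # 80
```

Under these assumptions the converged design halves server-side cabling, along with the matching access-switch ports. Real savings depend on the existing topology and redundancy requirements.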

Meeting the Challenge with FCoE and CEE

Network-only 10GbE does not have the same data qualification and interoperability requirements that storage does. However, running FC and Ethernet storage traffic over a converged fabric requires significant work to make 10GbE a lossless, native network layer. Ethernet can already isolate traffic using Virtual Local Area Networks (VLANs) and differentiate service using Quality of Service (QoS) priorities. Even so, it is still prone to network congestion, latency and frame dropping, an unacceptable state of affairs for a converged network. The Ethernet PAUSE frame (IEEE 802.3x) was defined earlier for flow control but was rarely implemented. It pauses all traffic on the link, and so fails the selective-pause criterion that a converged network requires.

FCoE - Technology Overview

Key to the unification of storage and networking traffic in next generation datacenters is a new enhanced Ethernet standard called Converged Enhanced Ethernet (CEE) that is being adopted by major suppliers. FCoE heavily depends on CEE. This new form of Ethernet includes enhancements that make it a viable transport for storage traffic and storage fabrics without requiring TCP/IP overheads. These enhancements include:

- Priority-based Flow Control (PFC);
- Enhanced Transmission Selection (ETS);
- Congestion Notification (CN).
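The sketch below builds both the classic PAUSE frame and a PFC frame as raw MAC Control frames, to make the difference concrete. The field layout is assumed from IEEE 802.3x and IEEE 802.1Qbb:

- Ethertype 0x8808;
- destination 01-80-C2-00-00-01;
- PAUSE opcode 0x0001 with a single timer;
- PFC opcode 0x0101 with a priority-enable vector and eight per-priority timers.

The function names are hypothetical. Using priority 3 for FCoE is a common deployment convention, not something stated in this report. The 4-byte Ethernet FCS is left to the NIC.

```python
from __future__ import annotations

import struct

MAC_CONTROL_ETHERTYPE = 0x8808
PAUSE_OPCODE = 0x0001  # IEEE 802.3x PAUSE: one timer for the whole link
PFC_OPCODE = 0x0101    # IEEE 802.1Qbb PFC: one timer per priority
PAUSE_DST = bytes.fromhex("0180c2000001")  # reserved MAC Control multicast address
MIN_FRAME_NO_FCS = 60  # minimum Ethernet frame size, excluding the 4-byte FCS


def _pad(frame: bytes) -> bytes:
    return frame + b"\x00" * max(0, MIN_FRAME_NO_FCS - len(frame))


def _check_quanta(quanta: int) -> None:
    if not 0 <= quanta <= 0xFFFF:
        raise ValueError("pause time must fit in 16 bits")


def pause_frame(src: bytes, quanta: int) -> bytes:
    """Classic PAUSE: stops *all* traffic on the link for `quanta`."""
    _check_quanta(quanta)
    hdr = PAUSE_DST + src + struct.pack("!H", MAC_CONTROL_ETHERTYPE)
    return _pad(hdr + struct.pack("!HH", PAUSE_OPCODE, quanta))


def pfc_frame(src: bytes, pause: dict[int, int]) -> bytes:
    """PFC: stops only the priorities listed in `pause` (priority -> quanta)."""
    vector = 0
    timers = [0] * 8
    for prio, quanta in pause.items():
        if not 0 <= prio <= 7:
            raise ValueError("priority must be 0..7")
        _check_quanta(quanta)
        vector |= 1 << prio  # enable bit for this priority
        timers[prio] = quanta
    hdr = PAUSE_DST + src + struct.pack("!H", MAC_CONTROL_ETHERTYPE)
    body = struct.pack("!HH8H", PFC_OPCODE, vector, *timers)
    return _pad(hdr + body)


def quanta_to_seconds(quanta: int, link_bps: float) -> float:
    """One pause quantum is 512 bit times on the given link."""
    return quanta * 512 / link_bps
```

Consider a switch whose FCoE class is congested. It could send `pfc_frame(mac, {3: 0xFFFF})`, which halts only priority 3 for up to `quanta_to_seconds(0xFFFF, 10e9)`, about 3.36 ms at 10 Gb/s. LAN traffic on the other priorities keeps flowing. A `pause_frame` would instead stop everything on the link.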

Enhancements in CEE Specifications include:

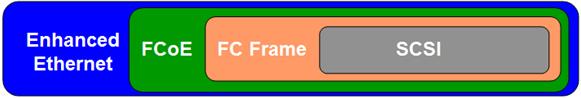

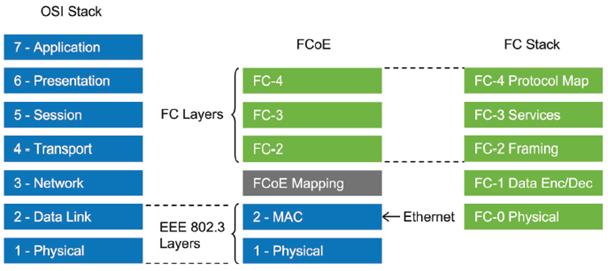
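Of the CEE enhancements listed in the technology overview, ETS decides how a converged link's bandwidth is shared among traffic classes. The function below is a simplified water-filling model, not the per-frame transmission selection algorithm defined in IEEE 802.1Qaz. Each traffic class is guaranteed its configured percentage. Bandwidth that a class leaves unused is redistributed to busier classes in proportion to their shares. The class names, percentages and demands in the example are hypothetical.

```python
from __future__ import annotations


def ets_allocate(link_gbps: float,
                 shares: dict[str, float],
                 demand: dict[str, float]) -> dict[str, float]:
    """Split link bandwidth among traffic classes (simplified ETS-style model).

    shares: class -> guaranteed percentage of the link (should sum to 100)
    demand: class -> offered load in Gb/s
    """
    alloc = {c: 0.0 for c in shares}
    remaining = link_gbps
    unmet = {c for c in shares if demand.get(c, 0.0) > 0.0}
    while unmet and remaining > 1e-9:
        total = sum(shares[c] for c in unmet)
        if total <= 0:
            break  # no hungry class has a share with which to claim bandwidth
        budget = remaining
        for c in sorted(unmet):
            # Offer each still-hungry class its proportional slice of what is
            # left this round, but never more than it actually needs.
            give = min(budget * shares[c] / total, demand[c] - alloc[c])
            alloc[c] += give
            remaining -= give
        unmet = {c for c in unmet if demand[c] - alloc[c] > 1e-9}
    return alloc


if __name__ == "__main__":
    shares = {"FCoE": 50, "LAN": 30, "IPC": 20}
    print(ets_allocate(10.0, shares, {"FCoE": 3, "LAN": 8, "IPC": 1}))
    # {'FCoE': 3.0, 'LAN': 6.0, 'IPC': 1.0}
    print(ets_allocate(10.0, shares, {"FCoE": 10, "LAN": 10, "IPC": 10}))
    # {'FCoE': 5.0, 'LAN': 3.0, 'IPC': 2.0}
```

In the first case, the LAN class borrows the 3 Gb/s that FCoE and IPC leave idle and ends up with 6 Gb/s instead of its 3 Gb/s guarantee. When every class is saturated, each receives exactly its configured share.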

FCoE hosted on 10 Gbps Enhanced Ethernet extends the reach of Fibre Channel (FC) storage networks, allowing them to connect virtually every datacenter server to a centralized pool of storage. Using the FCoE protocol, FC traffic can now be mapped directly onto Enhanced Ethernet. FCoE allows storage and network traffic to be converged onto one set of cables, switches and adapters, reducing cable clutter, power consumption and heat generation. FCoE is designed to use the same operational model as native Fibre Channel technology. Services such as discovery, world-wide name (WWN) addressing, zoning and LUN masking all operate the same way in FCoE as they do in native FC. Storage management through an FCoE interface has the same look and feel as storage management through traditional FC interfaces.

Mapping FC stack on FCoE

FCoE carries the FC-2 layer over the Ethernet layer. This allows Ethernet to transport the upper Fibre Channel layers, FC-3 and FC-4, over the IEEE 802.3 Ethernet layers. Using IEEE 802.1Q tags, Ethernet can be configured with multiple virtual LANs (VLANs) that partition the physical network into separate, secure virtual networks. With VLANs, FCoE traffic can be separated from IP traffic, so the two domains are isolated and one network cannot be used to view traffic on the other.
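The FC-over-Ethernet mapping and VLAN separation described above can be sketched as a byte layout. The code wraps an opaque, already-built FC frame (FC header, payload and FC CRC) in an 802.1Q-tagged Ethernet frame with Ethertype 0x8906, then unwraps it unchanged. The layout follows our reading of the T11 FC-BB-5 specification:

- a 14-byte FCoE header: 4-bit version, reserved bits, 1-byte SOF;
- a 4-byte trailer: 1-byte EOF plus reserved bytes.

The SOF/EOF code values, the VLAN ID, the MAC addresses and all function names are assumptions for illustration. FIP discovery and fabric login, and the Ethernet FCS, are not shown.

```python
from __future__ import annotations

import struct

TPID_8021Q = 0x8100       # IEEE 802.1Q VLAN tag
ETHERTYPE_FCOE = 0x8906   # FCoE data frames
SOF_I3 = 0x2E             # assumed code for SOFi3 (start of a class-3 sequence)
EOF_T = 0x42              # assumed code for EOFt (end of sequence)
FCOE_HDR_LEN = 14         # 4-bit version + reserved bits + 1-byte SOF
FCOE_TRAILER_LEN = 4      # 1-byte EOF + 3 reserved bytes
ETH_TAGGED_HDR_LEN = 18   # dst MAC + src MAC + VLAN tag + Ethertype
MAX_FC_FRAME = 24 + 2112 + 4  # FC header + max payload + FC CRC


def vlan_tci(pcp: int, vid: int) -> int:
    """Tag control info: 3-bit priority, DEI bit left at 0, 12-bit VLAN ID."""
    if not (0 <= pcp <= 7 and 0 <= vid <= 0xFFF):
        raise ValueError("pcp must be 0..7 and vid 0..4095")
    return (pcp << 13) | vid


def fcoe_encapsulate(dst: bytes, src: bytes, fc_frame: bytes, vid: int,
                     pcp: int = 3, sof: int = SOF_I3, eof: int = EOF_T) -> bytes:
    """Wrap an unmodified FC frame in a VLAN-tagged FCoE frame (FCS omitted)."""
    if len(dst) != 6 or len(src) != 6:
        raise ValueError("MAC addresses must be 6 bytes")
    if not 28 <= len(fc_frame) <= MAX_FC_FRAME:
        raise ValueError("FC frame must be 28..2140 bytes")
    eth = dst + src + struct.pack("!HHH", TPID_8021Q, vlan_tci(pcp, vid),
                                  ETHERTYPE_FCOE)
    header = bytes(13) + bytes([sof])  # version 0 in the top 4 bits, rest reserved
    trailer = bytes([eof]) + bytes(3)
    return eth + header + fc_frame + trailer


def fcoe_decapsulate(frame: bytes) -> tuple[int, bytes]:
    """Return (VLAN ID, FC frame); the FC frame comes back byte-for-byte intact."""
    tpid, tci, ethertype = struct.unpack_from("!HHH", frame, 12)
    if tpid != TPID_8021Q or ethertype != ETHERTYPE_FCOE:
        raise ValueError("not a VLAN-tagged FCoE frame")
    start = ETH_TAGGED_HDR_LEN + FCOE_HDR_LEN
    return tci & 0xFFF, frame[start:len(frame) - FCOE_TRAILER_LEN]


if __name__ == "__main__":
    fc = bytes(range(64))  # stand-in for a real FC frame (header + payload + CRC)
    frame = fcoe_encapsulate(bytes.fromhex("020000000001"),
                             bytes.fromhex("020000000002"), fc, vid=100)
    vid, recovered = fcoe_decapsulate(frame)
    print(vid, recovered == fc)  # 100 True
    # Largest frame on the wire once the 4-byte Ethernet FCS is added:
    print(len(fcoe_encapsulate(bytes(6), bytes(6), bytes(MAX_FC_FRAME), vid=100)) + 4)  # 2180
```

The FC frame bytes pass through untouched. The text relies on exactly this property to preserve zoning, LUN masking and WWN-based management. The VLAN ID in the tag keeps FCoE traffic in its own virtual network, separate from IP traffic. Under these assumptions the largest frame is 2,180 bytes, which exceeds a standard 1,522-byte VLAN-tagged Ethernet frame. For that reason, FCoE links are typically configured for "baby jumbo" frames.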

Because FCoE preserves the complete Fibre Channel frame, all traditional FC management functions such as zoning and LUN masking are preserved. Switches create and manage zones and zone sets in the same fashion, and LUN masking is performed in the same manner as in traditional Fibre Channel fabrics. Application servers see storage LUNs the same way whether they are presented with standard FC technology or with FCoE technology. As storage systems with native FCoE interfaces come to market, IT organizations can connect them directly into the FCoE fabric. Over time, data centers can migrate to an end-to-end converged fabric of FCoE-aware initiators, switches and storage targets while keeping their traditional Fibre Channel management tools.

FCoE: Pros & Cons

The business benefits of this improved topology include reduced cost and complexity, better and more flexible performance, and reduced power consumption. All of this comes with seamless connectivity to existing Ethernet and storage networks.

Reduced Cabling Spaghetti

Extending FC into the Future

The Fibre Channel over Ethernet (FCoE) protocol may still be in the early stages of development, but its ability to consolidate separate storage and data networks into a single unified architecture in the data center holds a lot of potential and value. SMBs, ROBOs and medium enterprises will also benefit strongly from the lossless Ethernet adopted in CEE. They will gain too from the economies of scale and management simplicity that many iSCSI SANs provide.

Many leading storage and networking companies have invested heavily in FC and enjoy excellent margins on their FC products. They include Emulex, QLogic, Brocade, Cisco, EMC, IBM, HP, HDS and Sun. These companies began to feel the heat from iSCSI products riding on the popularity of Ethernet everywhere, and from the price erosion that comes with volume-driven economics. So it is only natural that they all actively support the FCoE and CEE efforts to extend the life of FC. Their motivation for extending classical Ethernet is understandable for financial (and technical) reasons. Whatever iSCSI's qualities (an all-IP environment, TOE/RDMA engines, low-cost implementations, etc.), it still rides on TCP/IP over lossy Ethernet. Cisco, Emulex and Brocade have taken an aggressive stance in promoting FCoE and driving development of the standard, and have a lot riding on it. Even QLogic, which seems to have a foot in both camps, will ultimately benefit, as will all players with strong FC products. But the FC vendors are not the only beneficiaries. In our view the CEE tide will raise both boats, FCoE and iSCSI, and in the end the end users benefit most from open standards.

Supplier Companies

Industry heavyweights with strong FC investments are at the heart of the movement. Many startups, such as Woven Systems, are actively bringing related products to market.
- Components: Brocade, Fujitsu Limited, Intel, LSI, PMC-Sierra, Mellanox, Vitesse Semiconductor
- Software: Neoscale Systems, VMware
- Testers: Finisar
- Storage Subsystems: EMC, Emulex, Hitachi Data Systems, NetApp, QLogic
- Systems: Cisco, Fujitsu-Siemens, Hewlett Packard, IBM, Sun Microsystems

IMEX Industry Report – FCoE in NGDC 2009 Synopsis

IMEX Research's Industry Report on FCoE in NexGen DataCenter 2009 addresses the need to understand the strategic and tactical directions IT industry vendors are taking. Those directions will shape the converged networking and storage fabric products to be deployed in data center LANs, SANs and WANs. The report compares the existing FC and iSCSI SAN protocols with the upcoming FCoE (Fibre Channel over Ethernet) running on CEE (Converged Enhanced Ethernet over 10GbE). It uses various criteria that reflect CIOs' SLA needs for their data centers and the migration strategies required to protect existing investments. It also gives IT infrastructure vendors and IT decision makers detailed guidance on deploying converged storage and networks that best meet their mission-critical operational needs while significantly improving the TCO/ROI of their datacenter operations.

For technical and marketing managers, the report enumerates various technologies, architectures and go-to-market solutions for vendors. It compares the advantages of FCoE against other storage technologies, specifically iSCSI and InfiniBand, and explains if, when and where FCoE is likely to make competitive inroads against them. FCoE can be deployed in a variety of topologies: as an addition to existing storage and data infrastructure, or as the basis of a completely new network at greenfield sites. Drawing on interviews with end users from different verticals, the report therefore explores a number of use-case solutions targeted at different environments.

Target Audience

The report provides critical data and an incisive analysis for a range of industry participants, including:

Subjects analyzed

Major chapters


IMEX Research, 1474 Camino Robles, San Jose, CA 95120, (408) 268-0800, http://www.imexresearch.com